We tested whether sleep wearables actually agree — analyzing 77,000+ nights across six devices, plus same-person, same-night comparisons to isolate true device differences.

Sleep duration lines up, sleep stages don’t — most trackers cluster around ~7 hours of total sleep and are usually within ~15–22 minutes on the same night, but deep/REM/light can diverge by hours between devices.

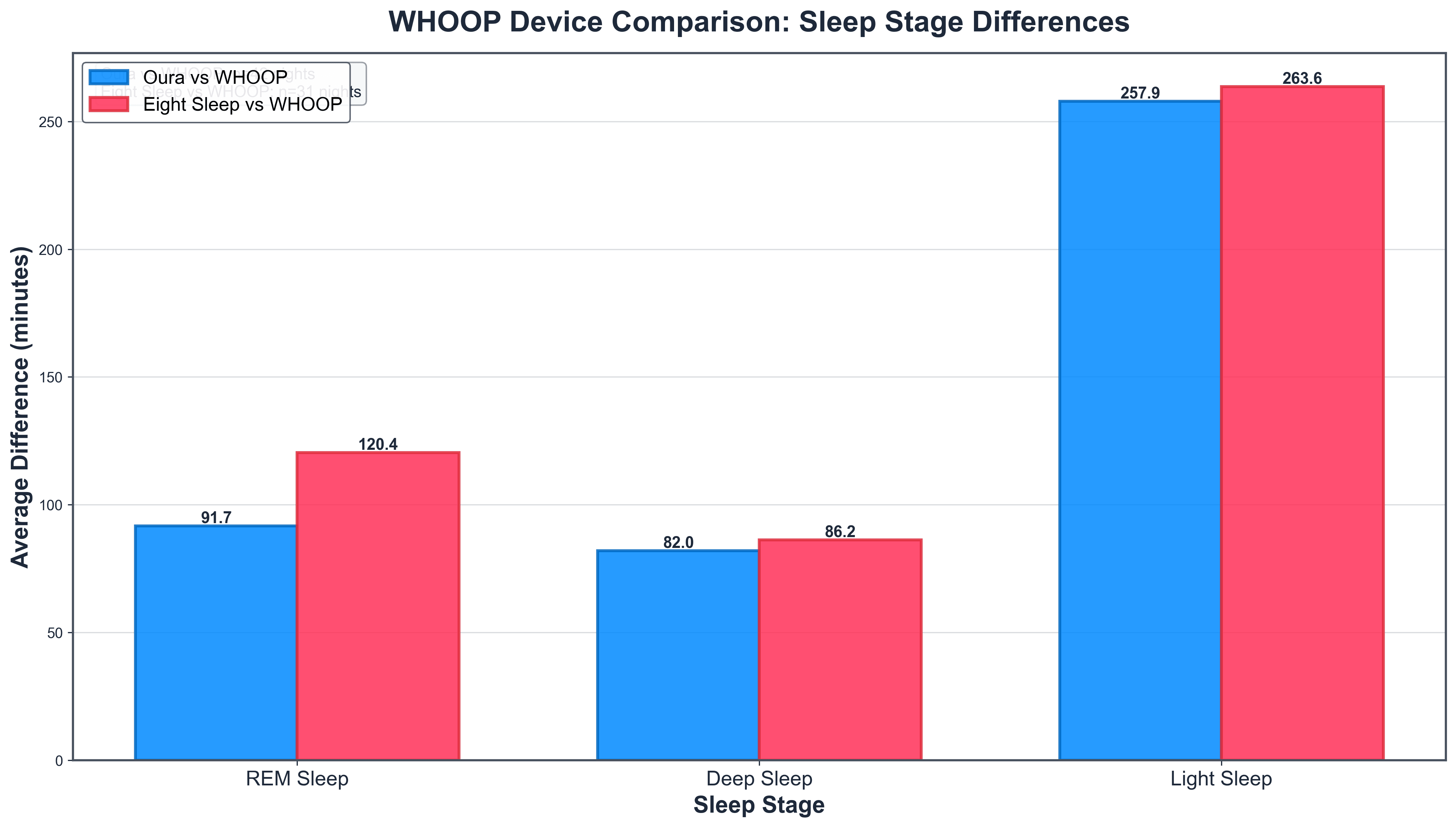

Key takeaway: sleep stages don’t match across devices — on the same nights, trackers differed by ~32 min (deep), ~23 min (REM), and ~38 min (light).


Sleep Metric Variation Across Wearables

By Alistair Brownlee, Faraaz Akhtar and Halvard Ramstad

January 22, 2026

WHOOP Data and Direct Multi-Device Comparisons

After we published the sleep data comparison a few weeks ago, we had quite a lot of requests on social media from WHOOP users eager to see how their device stacks up. So we're excited to include WHOOP in this updated analysis.

Building on our previous population-level exploration of sleep data from Oura, Fitbit, Apple, and Garmin, we incorporate fresh insights from direct head-to-head comparisons—nights where the same individuals used multiple devices simultaneously.

The dataset spans November 17 to December 17, 2025, encompassing over 77,000 filtered main sleep episodes (excluding naps and durations outside 4-13 hours). This real-world aggregation from thousands of users offers a robust view of consumer sleep patterns and device performance.
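As a rough illustration of the filtering step described above, the sketch below keeps only non-nap episodes lasting between 4 and 13 hours. The `SleepEpisode` record, its field names, and the inclusive bounds are assumptions made for this example, not the schema or exact rule used in the analysis.

```python
from dataclasses import dataclass


@dataclass
class SleepEpisode:
    # Hypothetical record layout, for illustration only.
    user_id: str
    device: str
    night: str          # date the episode is attributed to (ISO format)
    duration_h: float   # total sleep time in hours
    is_nap: bool


def filter_main_sleep(episodes, min_h=4.0, max_h=13.0):
    """Keep non-nap episodes whose duration is within [min_h, max_h] hours.

    Treating both bounds as inclusive is an assumption.
    """
    return [
        e for e in episodes
        if not e.is_nap and min_h <= e.duration_h <= max_h
    ]


episodes = [
    SleepEpisode("u1", "oura", "2025-11-17", 7.2, False),     # kept
    SleepEpisode("u1", "oura", "2025-11-17", 0.8, True),      # nap: dropped
    SleepEpisode("u2", "whoop", "2025-11-17", 3.1, False),    # too short: dropped
    SleepEpisode("u3", "garmin", "2025-11-18", 14.5, False),  # too long: dropped
]

kept = filter_main_sleep(episodes)
print(len(kept))
```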

The Crucial Distinction: Population-Level vs. Direct Comparisons

A core limitation of population-level analyses, such as our earlier one, is that they compare averages across different user groups. For instance, if Eight Sleep users average longer sleep than Apple Watch users, is that because the smart bed's temperature regulation genuinely extends sleep duration, or because Eight Sleep attracts a demographic more committed to recovery (e.g., biohackers or those with sleep issues who invest in premium solutions)? Similarly, differences could stem from lifestyle factors, age, or even users' motivation to wear/track consistently.

These ecological views are valuable for understanding broader trends among tracker users, but don't isolate device-specific effects. That's where multi-device nights change the game: by controlling for the individual (same person, same night, same sleep), we can directly assess algorithmic differences in detection and staging.
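One way to picture "same person, same night" matching is to group records by user and night, then compare every pair of devices that recorded that night. The sketch below is a minimal version of that idea; the record layout, the device labels, and the `overlapping_nights` helper are hypothetical and not taken from the analysis pipeline.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical records: (user_id, night, device, total_sleep_minutes)
records = [
    ("u1", "2025-11-17", "oura", 432),
    ("u1", "2025-11-17", "whoop", 451),
    ("u1", "2025-11-18", "oura", 405),          # single device: no pair
    ("u2", "2025-11-17", "eight_sleep", 390),
    ("u2", "2025-11-17", "whoop", 418),
]


def overlapping_nights(records):
    """Return (user, night, device_a, device_b, tst_a - tst_b) for every
    pair of devices worn by the same user on the same night."""
    by_night = defaultdict(dict)
    for user, night, device, tst in records:
        by_night[(user, night)][device] = tst

    pairs = []
    for (user, night), devices in by_night.items():
        # Sort device names so each pair is always reported in the same order.
        for a, b in combinations(sorted(devices), 2):
            pairs.append((user, night, a, b, devices[a] - devices[b]))
    return pairs


pairs = overlapping_nights(records)
for row in pairs:
    print(row)
```

Because each difference is computed within one person's night, anything that varies between users (age, lifestyle, motivation) cancels out of the comparison.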

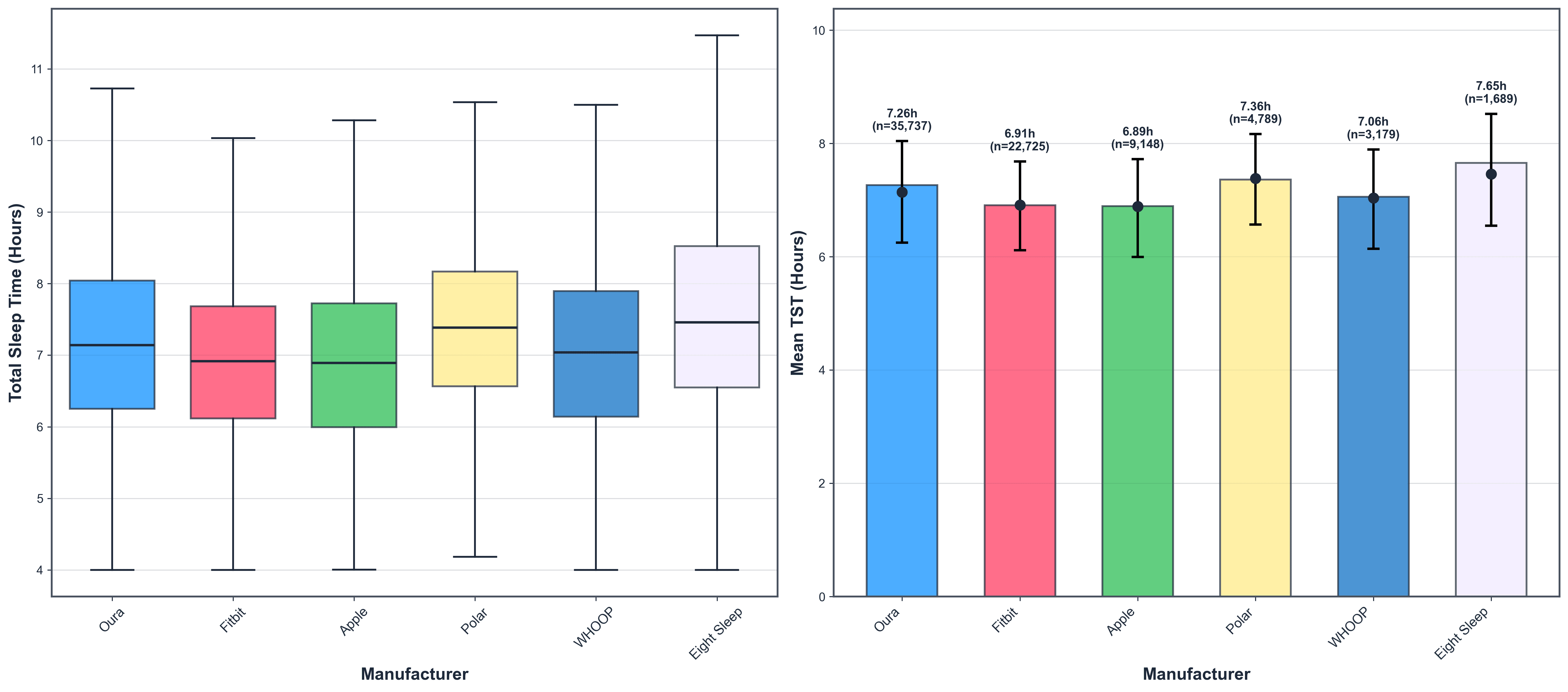

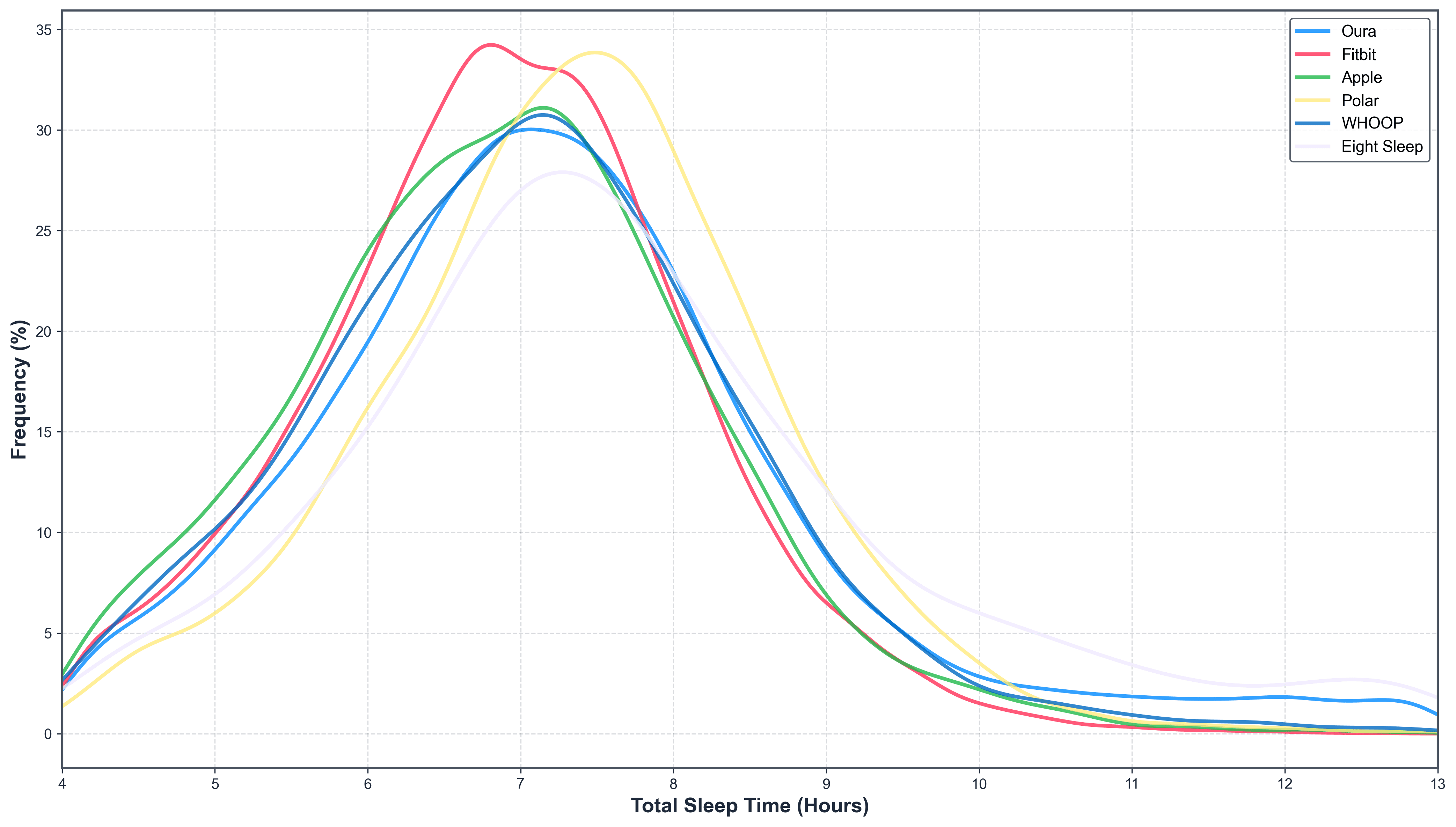

Population-Level Insights: A Solid ~7 Hours, With Notable Variations

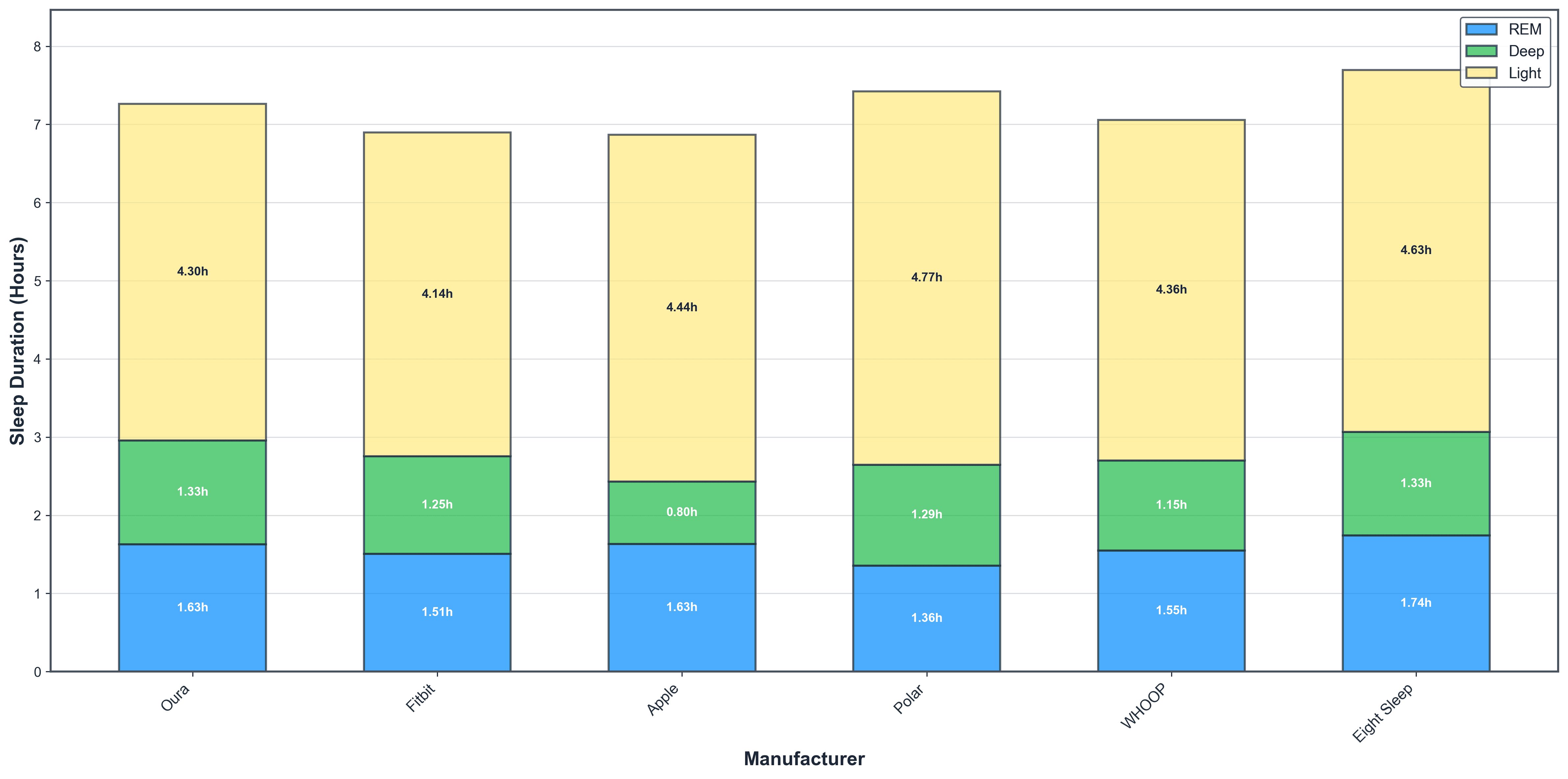

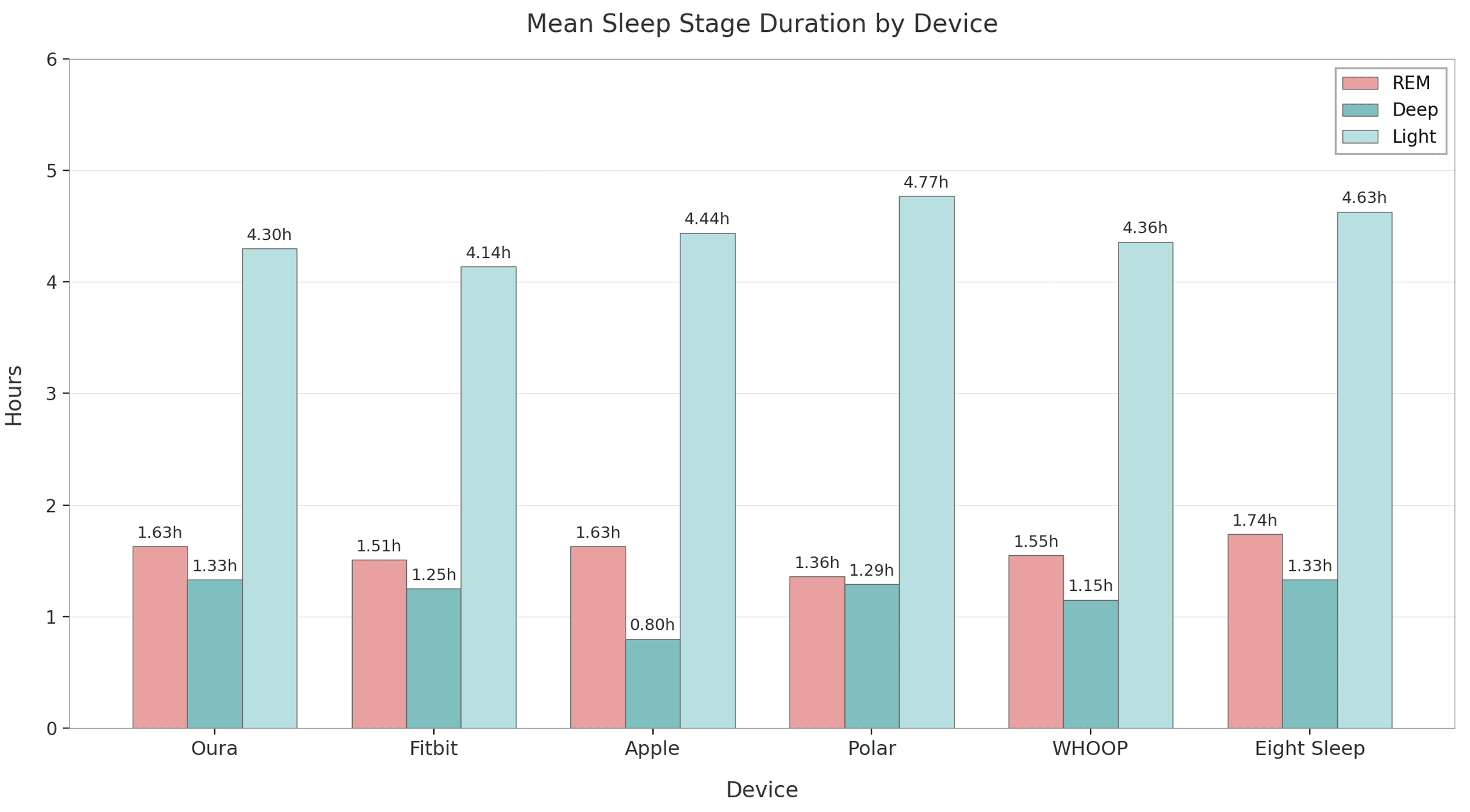

Across the six manufacturers, mean total sleep time (TST) ranges from 6.89 to 7.65 hours—encouragingly close to the 7-9 hours recommended by sleep experts for cognitive health, immune function, and mood regulation. Wearable users, often more health-aware than the general population (where averages dip below 7 hours in many surveys), seem to prioritize sleep moderately well.

We'd expect this: the sample is drawn from people who chose to wear a sleep tracker, a group we can be fairly confident is more interested in health than the general population.

Sleep stages follow expected physiology: light dominates (~60%), REM ~20-25% (1.3-1.7 hours), and deep varies most (0.8-1.3 hours). Deep sleep's variability highlights a known challenge: consumer devices rely on heart rate, movement, and sometimes temperature, but struggle to match the EEG sensitivity of lab polysomnography for slow-wave detection.

Apple's low deep sleep aligns with peer-reviewed validation studies showing wrist-based actigraphy often underestimates slow-wave sleep compared to PSG [1][2]. Conversely, Oura's higher deep sleep reporting has been found closer to gold standards in multiple independent validations [3][4].

WHOOP slots in comfortably, with averages reflecting its athlete-focused user base—decent duration but not outlier-long.

Direct Multi-Device Comparisons: Isolating Device Differences

Multi-device nights, where the same individual wears several devices at once, are the strongest comparison available to us: by controlling for the person, their physiology, bedtime habits, bedroom environment, and the exact sleep episode, we isolate device-to-device differences.

This direct, within-subject approach is rare in consumer tech analyses because it requires real-world overlap data. It shows how each device's sensors and algorithms interpret the same night of sleep, revealing biases in total sleep time detection and stage classification that aggregated population data can only hint at.

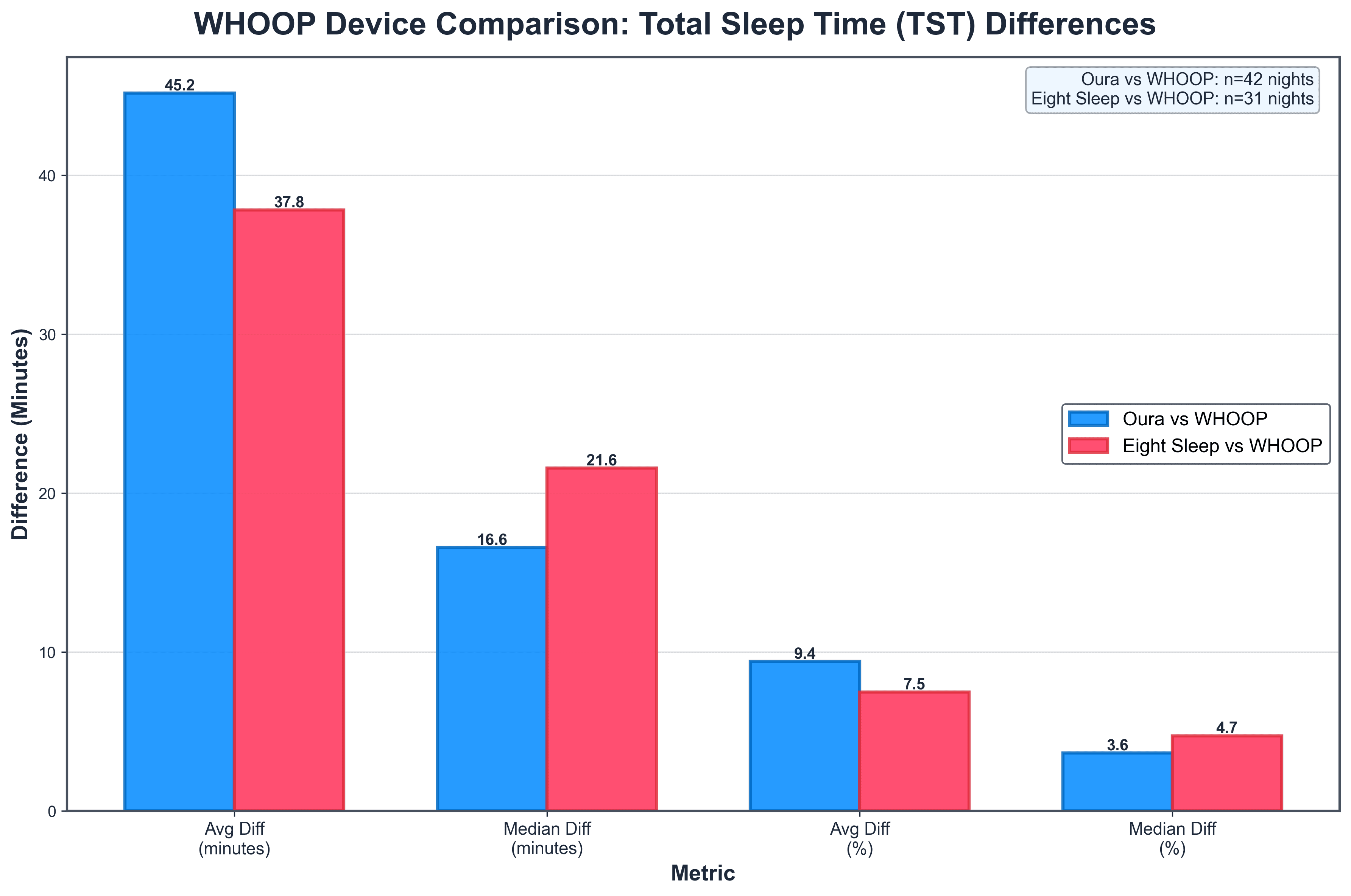

In the multi-device comparisons (such as Oura vs. WHOOP or Eight Sleep vs. WHOOP), the average total sleep time (TST) difference is substantially higher, around 38–45 minutes, than the median difference of just 17–22 minutes. The gap arises because the differences are positively skewed: on most nights the devices agree closely (differences under 20–30 minutes), so the median (the middle value when the differences are sorted) stays low and reflects typical performance.

However, a smaller number of outlier nights—where one device significantly over- or under-estimates sleep due to factors like movement artefacts, poor sensor contact, or algorithmic edge cases—can produce large deviations (60+ minutes).

These extremes heavily inflate the average (mean), which is sensitive to outliers, while the median remains robust and more representative of what users experience on an average night. In sleep tracking research, this pattern is common, which is why medians are often preferred for assessing day-to-day reliability.
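The effect is easy to reproduce with a handful of numbers. The values below are made up for illustration and are not taken from the dataset: seven nights of close agreement plus two outlier nights.

```python
from statistics import mean, median

# Illustrative absolute TST differences in minutes (invented, not study data).
diffs = [8, 12, 15, 17, 18, 20, 24, 95, 160]

print("mean:  ", mean(diffs))    # pulled up by the two outlier nights
print("median:", median(diffs))  # middle value, unaffected by the outliers
```

Here the two outlier nights lift the mean to 41 minutes while the median stays at 18 minutes, the same pattern seen in the device pairs.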

From 725 users contributing 2,144 overlapping nights (mostly dual-device), we get a clearer picture of how devices compare. We only ran comparisons on device pairs with 20+ nights of data:

- Oura vs. WHOOP (42 nights from multiple users):
  - Median TST difference: 16.6 minutes (average 45.2 minutes)
  - WHOOP higher TST on 57.1% of nights
  - Per-user averages ranged 7.2–86.4 minutes difference; most showed night-to-night variability (71.4% mixed direction)

- Eight Sleep vs. WHOOP (31 nights):
  - Median TST difference: 21.6 minutes (average 37.8 minutes)
  - WHOOP higher on 80.6% of nights, a more consistent bias
  - Some users (40%) always had WHOOP higher; others mixed
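The per-pair figures above can be sketched as follows. This is a minimal illustration under stated assumptions, not the code used for the analysis: `summarize_pair` and `MIN_NIGHTS` are hypothetical names, the "difference" is assumed to be the absolute TST gap per night, and a user counts as "mixed direction" when each device reads higher on at least one of their nights.

```python
from collections import defaultdict
from statistics import mean, median

MIN_NIGHTS = 20  # pairs with fewer overlapping nights are skipped, per the text


def summarize_pair(nights, min_nights=MIN_NIGHTS):
    """Summarize one device pair (A vs. B).

    nights: list of (user_id, tst_a_minutes, tst_b_minutes).
    Returns None when the pair has too few overlapping nights.
    """
    if len(nights) < min_nights:
        return None

    signed = [b - a for _, a, b in nights]  # positive: device B reads higher
    abs_diffs = [abs(d) for d in signed]

    by_user = defaultdict(list)
    for (user, _, _), d in zip(nights, signed):
        by_user[user].append(d)

    # Assumed definition: a user is "mixed" if both directions occur.
    mixed = sum(
        1 for ds in by_user.values()
        if any(d > 0 for d in ds) and any(d < 0 for d in ds)
    )

    return {
        "median_abs_diff": median(abs_diffs),
        "mean_abs_diff": mean(abs_diffs),
        "pct_b_higher": 100 * sum(d > 0 for d in signed) / len(signed),
        "pct_users_mixed": 100 * mixed / len(by_user),
    }


# Tiny invented example; min_nights is lowered so the summary is produced.
nights = [("u1", 420, 430), ("u1", 400, 395), ("u2", 410, 440), ("u2", 380, 400)]
summary = summarize_pair(nights, min_nights=2)
print(summary)
```

Reporting the median alongside the mean, and the share of nights on which one device reads higher, separates typical disagreement from consistent directional bias.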

Direct comparisons between WHOOP and Oura (across 42 nights) and between WHOOP and Eight Sleep (31 nights) reveal deeper insights into device-specific biases and user variability in sleep tracking. Notably, WHOOP tends to report longer total sleep time (TST) more frequently—57% of nights against Oura and a striking 81% against Eight Sleep—suggesting WHOOP's strap-based algorithm may be more lenient in classifying quiet wakefulness as sleep, perhaps due to its focus on athlete recovery metrics. However, median TST differences remain modest (under 25 minutes), indicating strong overall agreement for most nights, while larger average differences highlight occasional outliers influenced by factors like fit or movement.

Sleep stage discrepancies are even more pronounced, with average gaps exceeding 250 minutes for light sleep, underscoring algorithmic divergences: Oura's ring sensors (incorporating temperature) appear to detect more nuanced transitions, while Eight Sleep's bed-based system emphasizes REM, potentially overestimating it relative to WHOOP. User-level patterns add nuance: 71% of Oura-WHOOP users showed mixed night-to-night direction, whereas 40% of Eight Sleep-WHOOP users always had WHOOP reporting the higher value, which suggests individual physiology or sleep position may play a role.

Broader Implications and Practical Advice

Combining both lenses: Population data shows tracker users sleeping ~7 hours with stage proportions broadly sensible, but direct comparisons reveal TST reliability within ~20 minutes across brands—reassuring for duration tracking—while stages remain interpretive.

For WHOOP fans: Your device performs comparably in aggregates and shows modest biases in direct matchups, often edging longer TST.

Ultimately, these tools motivate better habits and reveal patterns (e.g., weekend recovery). But the discrepancies remind us to focus on subjective refreshment rather than chasing identical scores. Avoid "orthosomnia", the stress that comes from imperfect data!

References

[1] de Zambotti, Massimiliano, et al. “Wearable Sleep Technology in Clinical and Research Settings.” Medicine & Science in Sports & Exercise, 2019, https://pubmed.ncbi.nlm.nih.gov/30789439/.

[2] Walch, Olivia J., et al. “Sleep Stage Prediction with Raw Acceleration and Photoplethysmography Heart Rate Data Derived from a Consumer Wearable Device.” Sleep, vol. 42, no. 12, 2019, article zsz180, https://academic.oup.com/sleep/article/42/12/zsz180/5549536.

[3] Altini, Marco, and Hannu Kinnunen. “The Promise of Sleep: A Multi-Sensor Approach for Accurate Sleep Stage Detection Using the Oura Ring.” Sensors, vol. 21, no. 13, 2021, article 4302, https://www.mdpi.com/1424-8220/21/13/4302.

[4] Asgari Mehrabadi, Mohammad, et al. “Accuracy Assessment of Oura Ring Nocturnal Heart Rate and Heart Rate Variability in Comparison With Electrocardiography.” Journal of Medical Internet Research, vol. 24, no. 1, 2022, article e27487, https://www.jmir.org/2022/1/e27487/.